Before installing Hive, make sure that:

- Java is installed.

- Hadoop is installed and already running as a cluster.

Installation Process

1. Download Apache Hive version 3.1.2:

# wget https://www-eu.apache.org/dist/hive/hive-3.1.2/apache-hive-3.1.2-bin.tar.gz

or

# curl -O https://www-eu.apache.org/dist/hive/hive-3.1.2/apache-hive-3.1.2-bin.tar.gz
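Either tool works. If you script this step, a small helper can use whichever downloader is installed. This is a minimal sketch. The function name `fetch` is hypothetical and is not part of Hive or Hadoop.

```shell
#!/usr/bin/env bash
# Hypothetical helper: download a URL into the current directory,
# preferring wget and falling back to curl.
fetch() {
  local url="$1"
  if [ -z "$url" ]; then
    echo "usage: fetch URL" >&2
    return 2
  fi
  if command -v wget >/dev/null 2>&1; then
    wget "$url"
  elif command -v curl >/dev/null 2>&1; then
    # -f: fail on HTTP errors, -L: follow redirects, -O: keep the remote file name
    curl -fLO "$url"
  else
    echo "fetch: neither wget nor curl is installed" >&2
    return 127
  fi
}
```

Usage: `fetch https://www-eu.apache.org/dist/hive/hive-3.1.2/apache-hive-3.1.2-bin.tar.gz`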

2. Extract the archive and move the resulting directory. I put it in /opt, which is the main directory for the Hadoop applications I have installed:

# tar xvzf apache-hive-3.1.2-bin.tar.gz

# mv apache-hive-3.1.2-bin /opt/
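`mv` has no `-C` option. That flag belongs to `tar`, which can extract directly into the target directory, as step 5 does with the Derby archive. Below is a minimal sketch of that one-step variant, wrapped in a hypothetical `extract_to` function. It also confirms that the expected top-level directory was created. It assumes the archive has a single top-level directory, which the paths used in this tutorial indicate for both the Hive and Derby archives.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract a .tar.gz archive into DEST (default /opt)
# and print the path of the directory it created.
extract_to() {
  local tarball="$1" dest="${2:-/opt}"
  local top
  # Assumes a single top-level directory: take it from the first entry,
  # e.g. "apache-hive-3.1.2-bin/" -> "apache-hive-3.1.2-bin".
  top=$(tar tzf "$tarball" | awk -F/ 'NR == 1 { print $1 }')
  if [ -z "$top" ]; then
    echo "extract_to: cannot list $tarball" >&2
    return 1
  fi
  tar xzf "$tarball" -C "$dest" || return 1
  if [ ! -d "$dest/$top" ]; then
    echo "extract_to: $dest/$top was not created" >&2
    return 1
  fi
  echo "$dest/$top"
}
```

Usage: `extract_to apache-hive-3.1.2-bin.tar.gz /opt` replaces both the `tar` and the `mv` command in this step.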

3. Next, set up the environment variables:

# nano /etc/profile

Append the following at the end of the file:

export HIVE_HOME=/opt/apache-hive-3.1.2-bin

export HIVE_CONF_DIR=/opt/apache-hive-3.1.2-bin/conf

export PATH=$PATH:$HIVE_HOME/bin

export CLASSPATH=$CLASSPATH:/opt/hadoop/lib/*:.

export CLASSPATH=$CLASSPATH:/opt/apache-hive-3.1.2-bin/lib/*:

# ln -sf /etc/profile /root/.bashrc

# source /etc/profile
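It is easy to repeat the editor step by accident, which leaves duplicate export lines in /etc/profile. Below is a minimal sketch of an idempotent alternative. It uses hypothetical `append_once` and `add_hive_env` functions, and `append_once` adds a line only if that exact line is not already in the file. The paths are the ones used in this step.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append LINE to FILE unless FILE already contains
# exactly that line (fixed-string, whole-line match).
append_once() {
  local file="$1" line="$2"
  touch "$file" || return 1
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Append the Hive variables from this step to a profile file.
add_hive_env() {
  local profile="${1:-/etc/profile}"
  # Single quotes keep $PATH, $HIVE_HOME, etc. unexpanded, so they are
  # evaluated when the profile is sourced rather than when it is written.
  append_once "$profile" 'export HIVE_HOME=/opt/apache-hive-3.1.2-bin'
  append_once "$profile" 'export HIVE_CONF_DIR=/opt/apache-hive-3.1.2-bin/conf'
  append_once "$profile" 'export PATH=$PATH:$HIVE_HOME/bin'
  append_once "$profile" 'export CLASSPATH=$CLASSPATH:/opt/hadoop/lib/*:.'
  append_once "$profile" 'export CLASSPATH=$CLASSPATH:/opt/apache-hive-3.1.2-bin/lib/*:'
}
```

Usage (as root): `add_hive_env /etc/profile && source /etc/profile`. Running it a second time leaves the file unchanged.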

4. Next, download Derby version 10.13.1.1:

# wget http://archive.apache.org/dist/db/derby/db-derby-10.13.1.1/db-derby-10.13.1.1-bin.tar.gz

or

# curl -O http://archive.apache.org/dist/db/derby/db-derby-10.13.1.1/db-derby-10.13.1.1-bin.tar.gz

5. Extract it into the same main directory as the other applications, such as Hadoop, Kafka and Flume:

# tar xvzf db-derby-10.13.1.1-bin.tar.gz -C /opt/

6. Set the Derby environment variables:

# nano /etc/profile

Add:

export DERBY_HOME=/opt/db-derby-10.13.1.1-bin

export PATH=$PATH:$DERBY_HOME/bin

export CLASSPATH=$CLASSPATH:$DERBY_HOME/lib/derby.jar:$DERBY_HOME/lib/derbytools.jar

# source /etc/profile

# mkdir $DERBY_HOME/data

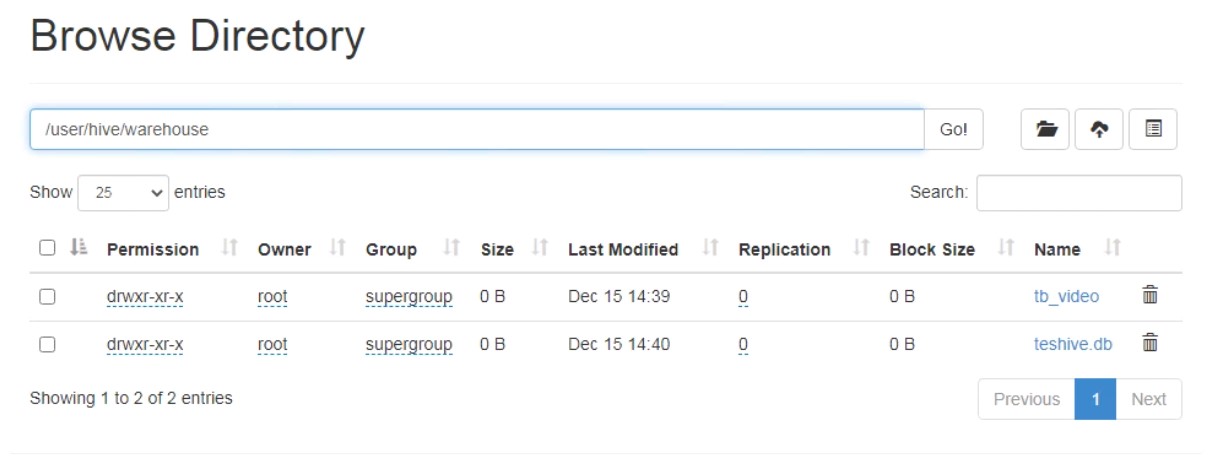
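Before you continue, you can confirm that DERBY_HOME points at a complete Derby installation. Below is a minimal sketch using a hypothetical `check_derby` function. It checks for the two jars added to CLASSPATH in this step and for the data directory that was just created.

```shell
#!/usr/bin/env bash
# Hypothetical check: verify that a Derby home directory contains the jars
# referenced in CLASSPATH and the data directory created in this step.
check_derby() {
  local home="${1:-$DERBY_HOME}"
  local missing=0 f
  if [ -z "$home" ]; then
    echo "check_derby: DERBY_HOME is not set (run 'source /etc/profile' first)" >&2
    return 1
  fi
  for f in lib/derby.jar lib/derbytools.jar; do
    if [ ! -f "$home/$f" ]; then
      echo "missing file: $home/$f" >&2
      missing=1
    fi
  done
  if [ ! -d "$home/data" ]; then
    echo "missing directory: $home/data" >&2
    missing=1
  fi
  return "$missing"
}
```

Usage: `check_derby && echo "Derby looks complete"` uses the DERBY_HOME value from /etc/profile.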

7. First, create the HDFS directories, including the warehouse directory:

# hdfs dfs -ls /

# hdfs dfs -mkdir -p /hbase

# hdfs dfs -mkdir -p /tmp

# hdfs dfs -mkdir -p /user/hive/warehouse

# hdfs dfs -chmod g+w /tmp

# hdfs dfs -chmod g+w /user/hive/warehouse
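You can also script the directory setup. Below is a minimal sketch that assumes the `hdfs` command from the Hadoop installation is on PATH and that HDFS is running. The function name `setup_hive_dirs` is hypothetical. It covers only /tmp and the warehouse directory, the two directories this step makes group-writable.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the HDFS directories Hive uses and make them
# group-writable, mirroring the commands in this step.
setup_hive_dirs() {
  local warehouse="${1:-/user/hive/warehouse}"
  local dir
  for dir in /tmp "$warehouse"; do
    # -p creates parent directories as needed, like Unix mkdir -p
    hdfs dfs -mkdir -p "$dir" || return 1
    hdfs dfs -chmod g+w "$dir" || return 1
  done
  # -d lists the directories themselves, so their permissions are visible
  hdfs dfs -ls -d /tmp "$warehouse"
}
```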

8. Next, configure hive-env.sh:

# cd $HIVE_HOME/conf

# cp hive-env.sh.template hive-env.sh

# nano hive-env.sh

then add:

export HADOOP_HOME=/opt/hadoop
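If you prefer to script this step, here is a minimal sketch using a hypothetical `configure_hive_env` function. It creates hive-env.sh from the template only if the file does not exist yet, and it appends the HADOOP_HOME export only once. The default paths are the ones used in this tutorial.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prepare hive-env.sh in CONF_DIR and point it at HADOOP_HOME.
configure_hive_env() {
  local conf_dir="${1:-$HIVE_HOME/conf}" hadoop_home="${2:-/opt/hadoop}"
  local env_file="$conf_dir/hive-env.sh"
  local line="export HADOOP_HOME=$hadoop_home"
  # Copy the template only on the first run, so later edits are not overwritten.
  if [ ! -f "$env_file" ]; then
    cp "$conf_dir/hive-env.sh.template" "$env_file" || return 1
  fi
  # Append the export unless that exact line is already present.
  grep -qxF -- "$line" "$env_file" || printf '%s\n' "$line" >> "$env_file"
}
```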

9. Configure hive-site.xml:

# cd $HIVE_HOME/conf

# nano hive-site.xml

The file starts out empty. Add the following:

<?xml version="1.0" encoding="UTF-8" standalone="no"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
   Licensed to the Apache Software Foundation (ASF) under one or more
   contributor license agreements. See the NOTICE file distributed with
   this work for additional information regarding copyright ownership.
   The ASF licenses this file to You under the Apache License, Version 2.0
   (the "License"); you may not use this file except in compliance with
   the License. You may obtain a copy of the License at

       http://www.apache.org/licenses/LICENSE-2.0

   Unless required by applicable law or agreed to in writing, software
   distributed under the License is distributed on an "AS IS" BASIS,
   WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
   See the License for the specific language governing permissions and
   limitations under the License.
-->
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:derby:;databaseName=/opt/apache-hive-3.1.2-bin/metastore_db;create=true</value>
    <description>
      JDBC connect string for a JDBC metastore.
      To use SSL to encrypt/authenticate the connection, provide database-specific SSL flag in the connection URL.
      For example, jdbc:postgresql://myhost/db?ssl=true for postgres database.
    </description>
  </property>
  <property>
    <name>hive.metastore.warehouse.dir</name>
    <value>/user/hive/warehouse</value>
    <description>location of default database for the warehouse</description>
  </property>
  <property>
    <name>hive.metastore.uris</name>
    <value/>
    <description>Thrift URI for the remote metastore. Used by metastore client to connect to remote metastore.</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>org.apache.derby.jdbc.EmbeddedDriver</value>
    <description>Driver class name for a JDBC metastore</description>
  </property>
  <property>
    <name>javax.jdo.PersistenceManagerFactoryClass</name>
    <value>org.datanucleus.api.jdo.JDOPersistenceManagerFactory</value>
    <description>class implementing the jdo persistence</description>
  </property>
</configuration>
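You can also write the same file non-interactively with a heredoc. Below is a minimal sketch that assumes the tutorial's paths. The function name `write_hive_site` is hypothetical. The quoted `'EOF'` delimiter stops the shell from expanding anything inside the XML. The license comment and the `<description>` elements are left out because they are documentation only. If `xmllint` (from libxml2) is installed, the function uses it to check that the result is well-formed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a minimal hive-site.xml for an embedded Derby metastore.
write_hive_site() {
  local conf_dir="${1:-$HIVE_HOME/conf}"
  local target="$conf_dir/hive-site.xml"
  # Quoted 'EOF': the heredoc body is written literally, with no variable expansion.
  cat > "$target" <<'EOF'
<?xml version="1.0" encoding="UTF-8" standalone="no"?>
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:derby:;databaseName=/opt/apache-hive-3.1.2-bin/metastore_db;create=true</value>
  </property>
  <property>
    <name>hive.metastore.warehouse.dir</name>
    <value>/user/hive/warehouse</value>
  </property>
  <property>
    <name>hive.metastore.uris</name>
    <value/>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>org.apache.derby.jdbc.EmbeddedDriver</value>
  </property>
  <property>
    <name>javax.jdo.PersistenceManagerFactoryClass</name>
    <value>org.datanucleus.api.jdo.JDOPersistenceManagerFactory</value>
  </property>
</configuration>
EOF
  # Optional well-formedness check, only if xmllint is available.
  if command -v xmllint >/dev/null 2>&1; then
    xmllint --noout "$target" || return 1
  fi
}
```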

10. Next, create a jpox.properties file in the same directory as hive-site.xml and fill it with:

javax.jdo.PersistenceManagerFactoryClass = org.jpox.PersistenceManagerFactoryImpl
org.jpox.autoCreateSchema = false
org.jpox.validateTables = false
org.jpox.validateColumns = false
org.jpox.validateConstraints = false
org.jpox.storeManagerType = rdbms
org.jpox.autoCreateSchema = true
org.jpox.autoStartMechanismMode = checked
org.jpox.transactionIsolation = read_committed
javax.jdo.option.DetachAllOnCommit = true
javax.jdo.option.NontransactionalRead = true
javax.jdo.option.ConnectionDriverName = org.apache.derby.jdbc.ClientDriver
javax.jdo.option.ConnectionURL = jdbc:derby://hadoop1:1527/metastore_db;create = true
javax.jdo.option.ConnectionUserName = APP
javax.jdo.option.ConnectionPassword = mine

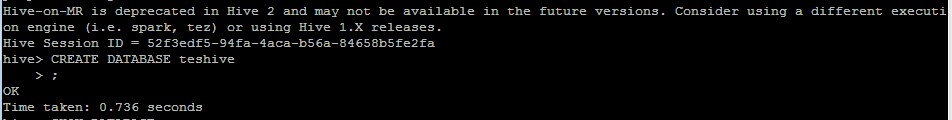

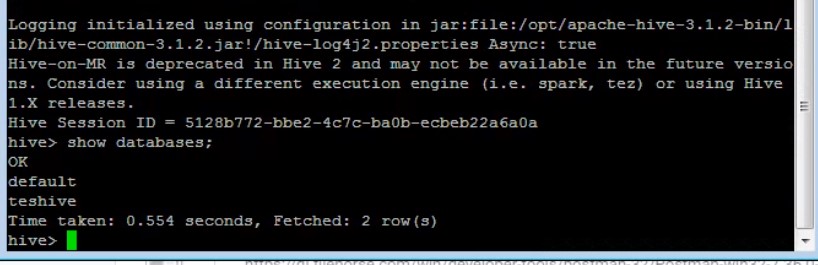

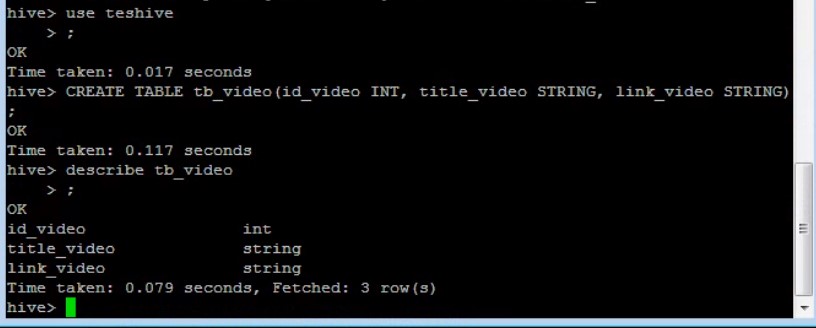
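The jpox.properties listing sets `org.jpox.autoCreateSchema` twice, first to `false` and then to `true`. When `java.util.Properties.load` reads a file like this, a later entry for the same key replaces the earlier one, so the effective value is `true`. Below is a minimal sketch of a shell check that lists keys defined more than once. The function name `duplicate_keys` is hypothetical. It only understands the simple `key = value` form used here, not `:` separators or line continuations.

```shell
#!/usr/bin/env bash
# Hypothetical check: print every key that appears more than once in a
# simple "key = value" properties file (comments and blank lines skipped).
duplicate_keys() {
  awk -F'=' '
    /^[[:space:]]*$/     { next }   # blank line
    /^[[:space:]]*[#!]/  { next }   # comment line
    NF >= 2 { key = $1; gsub(/[[:space:]]/, "", key); print key }
  ' "$1" | sort | uniq -d
}
```

Usage: `duplicate_keys $HIVE_HOME/conf/jpox.properties`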

11. Finally, initialize the metastore schema and test Hive with these commands:

# $HIVE_HOME/bin/schematool -dbType derby -initSchema

# hive

Testing
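Below is a minimal sketch of a non-interactive check using a hypothetical `hive_smoke_test` function. `schematool -info` prints the metastore connection and schema version details, and `hive -e` runs a single statement and then exits. It assumes $HIVE_HOME/bin is on PATH, as set up in step 3. Run it when no other Hive session is open, because only one process at a time can use the embedded Derby database.

```shell
#!/usr/bin/env bash
# Hypothetical smoke test: show the metastore schema details, then run one query.
hive_smoke_test() {
  local db_type="${1:-derby}"
  # Print connection and schema version information for the metastore.
  schematool -dbType "$db_type" -info || { echo "metastore schema check failed" >&2; return 1; }
  # Run a single HiveQL statement and exit; a fresh install lists "default".
  hive -e 'SHOW DATABASES;' || { echo "hive query failed" >&2; return 1; }
}
```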

